It is always interesting when you look at this planet of ours in a new way! Did anything surprise you in the figure?

In a previous post I used ChatGPT to generate a plot synopsis for a Star Trek episode that has never existed. Its response was well organized, coherent, and a huge improvement over the previous artificial intelligence/machine learning (AI/ML) methods that I used for a similar project in 2018. Other groups have used ChatGPT to take law and business school classes, where it has performed as a C+/B- level student. The newest version of ChatGPT, GPT-4, even passed the bar exam, scoring in the 90th percentile of test takers. Inspired by these case studies, below I describe how I applied ChatGPT to another human-centric decision-making exam: the U.S. Foreign Service Officer Test (FSOT) Situational Judgement Test (SJT).

U.S. Foreign Service Officers (FSOs) are the diplomats who represent the United States abroad at embassies and consulates. Their mission is to “promote peace, support prosperity, and protect American citizens while advancing the interests of the U.S. abroad.” Becoming an FSO is a multistep process, but one of the initial steps is taking the FSOT, which consists of one essay and three multiple-choice sections: job knowledge, English expression, and situational judgement. The SJT is the focus of today’s experiment.

From the Information Guide to FSO Selection: “The [SJT] is designed to assess an individual's ability to determine the most and least appropriate actions given a series of scenarios. The questions were written to assess precepts or competencies that are related to the job of a Foreign Service Officer, including Adaptability, Decision Making and Judgment, Operational Effectiveness, Professional Standards, Team Building, and Workplace Perceptiveness. (NOTE: Knowledge about the State Department's policies, procedures, or organizational culture is NOT required to answer these questions.)”

Each question of the SJT describes a situation that an FSO might encounter on the job, along with possible responses to that situation. For each scenario, test takers select the best and worst responses. Extensive blog posts have been written on how to approach these questions because, unlike job knowledge or English expression, this section is perceived as less objective and, as the description and name imply, more based on judgement. This is what makes assessing an AI’s performance on this section the most interesting. Would AI have better judgement than an FSO?

To approach this, I used a sample of 20 SJT questions from the internet with available answers. Here is one example SJT question:

There are multiple tasks to be completed by the end of the day. Three people will be arriving to complete these tasks. What should you do?

A. Make a detailed list of everything to do, and leave it for the three people to divide up among themselves.

B. Make a detailed list for each person to do, ensuring each list is tailored to each person's strengths.

C. Talk to each person and assign tasks based on their interests.

D. Ask the first person who arrives to be in charge of coordinating the work with the other two.

(answers below)

I sent this question and the other 19 to ChatGPT (version 3.5 Turbo) in a few variations. In the first and simplest variation, I only gave the AI instructions to determine the best and worst responses. In the second variation, I gave the AI the context from the Information Guide to FSO Selection shown above in addition to the instructions. In the third and final variation, I gave the same information as the second variation but used a slightly different model, version 3.5 Turbo-16K (at the time of writing, GPT-4 was not available to the author via the API).

Overall, the third variation, with the full context and the larger model, scored the most points (70%; 28 out of a possible 40, counting the best and worst responses on each of the 20 questions separately), followed by the second variation (65%; 26/40) and the first variation (57.5%; 23/40).

The results differed considerably between variations, and the third variation answered some questions incorrectly that the other variations answered correctly. In one extreme example, variations two and three got both parts of the question incorrect while variation one got one part correct. Embarrassingly, for this question the second variation thought that the best answer was the worst answer. On another occasion, variations one and two got both parts correct but variation three guessed incorrectly on the worst response. Finally, on the example question given above, only the third variation got both parts correct, with the other two variations getting opposite parts of the question correct. These differences are due, at least in part, to the non-deterministic nature of ChatGPT.
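
For readers who want to reproduce the tally, here is a minimal sketch of the scoring as described above: one point for a correct "best" pick and one for a correct "worst" pick, so 20 questions give 40 possible points. The function names and the tiny two-question key are hypothetical placeholders for illustration; they are not the real answer key or the code used for this post.

```python
from typing import Dict, Tuple

# Each entry maps a question number to a (best, worst) pair of option letters.
Answers = Dict[int, Tuple[str, str]]


def score_sjt(key: Answers, responses: Answers) -> int:
    """Award one point for each best or worst pick that matches the key."""
    points = 0
    for question, (best, worst) in key.items():
        # A missing response earns no points for that question.
        picked_best, picked_worst = responses.get(question, ("", ""))
        points += int(picked_best == best)
        points += int(picked_worst == worst)
    return points


def percent(points: int, num_questions: int) -> float:
    """Convert points to a percentage of the two points available per question."""
    return 100.0 * points / (2 * num_questions)


# Placeholder letters only (NOT the real answer key), for illustration.
key = {1: ("B", "D"), 2: ("A", "C")}
model = {1: ("B", "A"), 2: ("A", "C")}

print(score_sjt(key, model))  # best and worst are scored separately
print(percent(28, 20))        # the third variation's 28 points on 20 questions
```

Scoring the two parts separately is what allows a variation to get "one part correct" on a question, as in the examples above.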
Because of this randomness, it would be wise to run each variation multiple times to get average scores for each. Why didn’t I do this? While there is some financial cost associated with running these models, that was not the rate-limiting step that prevented these additional tests (this whole post only cost $0.08). The real cost was time. Despite several efforts, ChatGPT was always too verbose in its responses. It gave too much context with its answers, making it time-consuming to figure out the results of each run for the 20 questions. More trial and error with prompt optimization would eventually produce more streamlined answers (deeplearning.ai has a good online course on this topic that informed much of this work).

So, would ChatGPT be an FSO with sound judgement? We don’t know! The test scores are normalized to the average of other test takers, and we do not know what a typical passing score on this part of the test is. With a score of 70%, it is possible that the third variation could be in contention. The fact that all three variations were able to score so highly on multipart, multiple-choice questions about complex social scenarios is a testament to how far this technology has advanced.

The SJT portion of the FSOT is the most interesting test for ChatGPT because judgement is the most "human" of the skills assessed by the three multiple-choice sections. There are already AI tools to check spelling, commas, and other English grammar points. ChatGPT was trained on a broad base of knowledge and would likely be able to pass the fact-based job knowledge portion of the exam. Taking these together with this experiment, I am confident that ChatGPT could pass the FSOT. However, the FSOT is only one part of the FSO application. What would an AI write for its personal narratives and previous job experience? How would it participate in the in-person oral exam?
Furthermore, this experiment did not evaluate whether ChatGPT would be effective at determining who gets a visa, representing government interests in a negotiation, or visiting a citizen in prison. It cannot fully replace a human. But just because it cannot do all aspects of the job does not mean that it would not be useful. This experiment demonstrates that ChatGPT and similar LLMs may be more helpful in diplomatic work than one might naively assume.

The zeitgeist is grappling with whose jobs are going to be taken by AI in the near and far future. Are diplomats irreplaceable in the long run? Future iterations of ChatGPT-like AI may be able to handle all diplomatic work without human intervention, but not now. However, this work shows that AI today is capable of applying judgement to social situations. The real short-term risk is not that diplomats are replaced by AI, but rather that diplomats do not embrace AI as a force multiplier and get left behind in this new chapter of our AI-enabled world.

In 2018, I trained a recurrent neural network (a type of artificial intelligence/machine learning, or AI/ML) on all the plots of Star Trek (TNG, DS9, and VOY). The proposed goal was to have the network create new and exciting plots based on the characters, places, and themes of the original episodes. After training for 24 hours, I asked the neural network to generate new text based on what it had “learned.” As you can read in my previous post, the output was more or less Star Trek-themed nonsense. While the network output words in an order that approximated sentences, there was no sense of plot or structure in the output. However, at the time this was indicative of the cutting-edge AI/ML technologies available. And in a world without new Star Trek, it was an entertaining, nostalgic diversion.

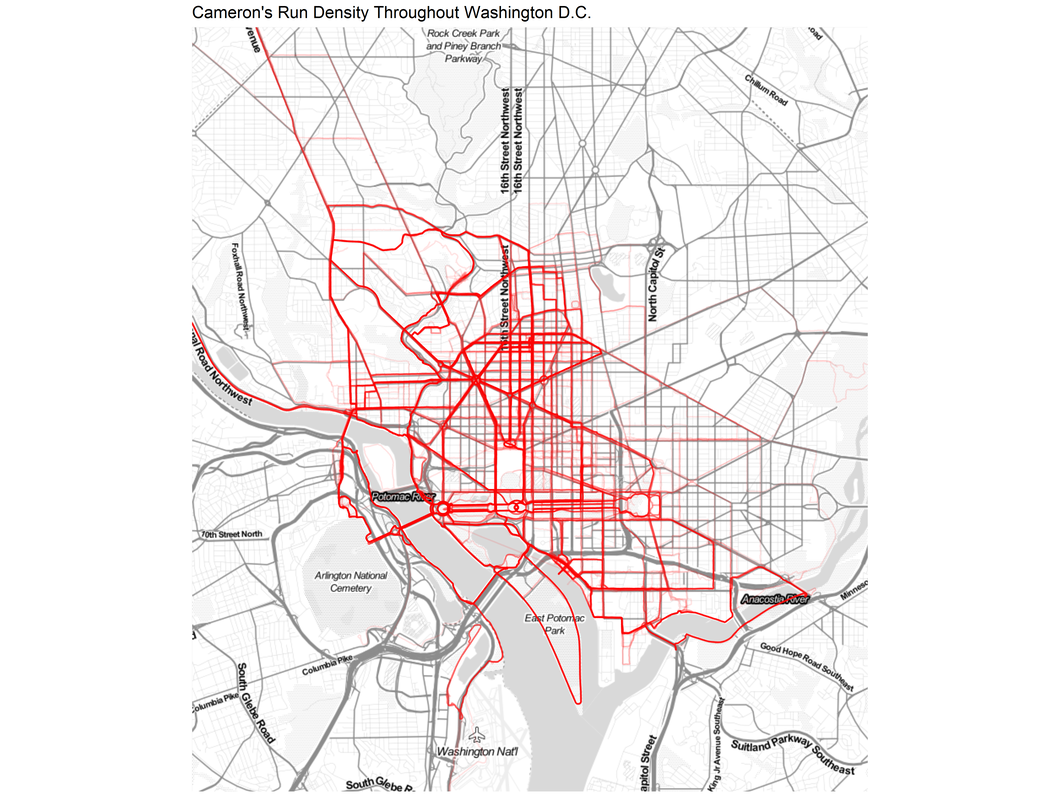

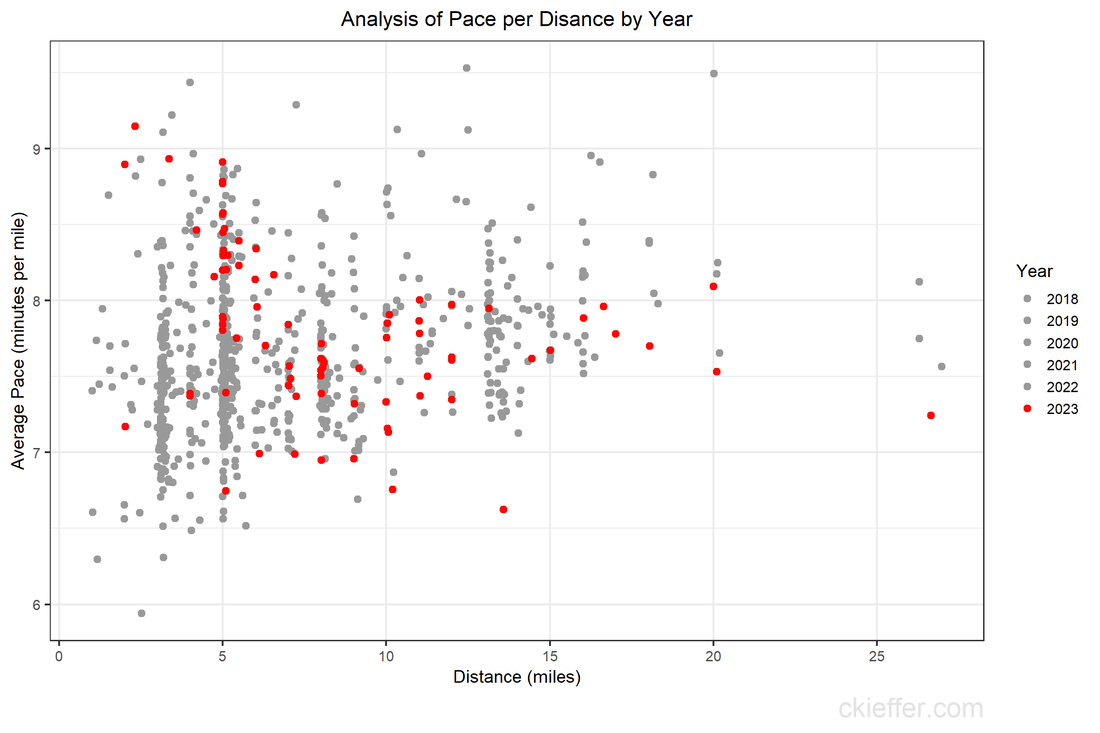

Since that prior post, two things have happened. First, in 2023 the media-consuming public is in the midst of something like a Star Trek renaissance, with several series (Discovery, Picard, Prodigy, Lower Decks, and Strange New Worlds) all bringing new ideas to the Star Trek canon. Second, more recently and more crucially, ChatGPT has been released by OpenAI. This new tool makes generating text using AI significantly easier and more intuitive.

My original recurrent neural network experiment was extremely cumbersome. It required 24 hours of training on my personal laptop. It required Python code to extract the plot synopses, train the model, and request the output. After all that, the model produced output letter by letter that was not usable for the desired purpose.

By contrast, the ChatGPT tool is both more useful and more usable. ChatGPT is a generalized Large Language Model that the OpenAI team has pretrained on enormous servers, giving it far more training than my laptop ever could. It can generate responses to complex prompts that incorporate prior context and knowledge from the internet. On top of that, it can be accessed by anyone for free via an easy-to-use chat-like interface instead of complex Python code.

To demonstrate how much better the ChatGPT output is, here is one sample synopsis that ChatGPT produced for me when given the prompt, “Write a 100 word plot synopsis for an episode of the television show Star Trek Deep Space 9 that has never existed.”

“Title: Shadows of the Past In the unexplored Gamma Quadrant, the crew of Deep Space 9 investigates a planet trapped in a repeating temporal loop. They discover the Zynthians, a technologically advanced species, who made a pact with a malevolent entity, leading to their downfall. The crew must break the cycle and free the Zynthians, facing temporal distortions and moral dilemmas. Unity and forgiveness become the key to redemption as they race against time. This thought-provoking episode explores the consequences of choices and the power of hope, reminding us that even in darkness, understanding can pave the way for a brighter future.”

This response demonstrates some key improvements over the text that my recurrent neural network generated. The plot follows conventional story structure and includes the same alien species throughout (the Zynthians). It generated a relevant title based on the plot. ChatGPT knew that the fictional Gamma Quadrant features heavily in Star Trek: DS9, and a longer version of this synopsis included the names of the main characters of the show. Recognizing that Star Trek episodes usually have a moral or philosophical theme, it emphasized that this episode focuses on “the consequences of choices and the power of hope.”

Overall, this is a monumental improvement over my previous attempts and demonstrates the utility and usability of ChatGPT in generating text. In 2018, leading experts in the field were effusive in their praise for recurrent neural networks. Now, five years later, the AI community has taken a dramatic leap forward.

Despite these improvements, the output is far from perfect. Longer versions of the response had conflicting themes, and ChatGPT's outputs are necessarily derivative of existing Star Trek works. At the time of writing, there is an ongoing writers' strike. It is important to recognize that while AI has improved dramatically, it cannot replace real writers. It remains a tool that is useful but not creative or emotive. However, given the strides in development, it is not unreasonable to think that the next update to this blog post in 2028 will feature AI-generated plots, scripts, audio, and video, all at the push of a button. Whether those are any good remains to be seen. But at that point it may feel more like we are living in the Star Trek universe rather than writing fiction about it.
During the height of the COVID-19 pandemic, everyone was trapped indoors. Various jurisdictions had different policies on appropriate justifications for venturing outside. I vividly remember the DC National Guard being deployed to manage the flow of people into the nearby fish market. However, throughout the pandemic, outdoor and socially distant running or exercising was generally allowed. Because the gyms were closed and the outdoors were open, my miles run per week shot up dramatically. I even wrote a blog post about it in April 2020.

Now the pandemic has subsided, but the running has not. In fact, I’m running more than ever in a hunt for a Boston Marathon qualifying time. During this expanded training, and throughout the past three years, I have run many of the local DC streets. I have updated the running heatmap that I published in 2020 after several thousand additional miles in the District. I’ve been told the premium version of the Strava run tracking phone app will make a similar map for you. This data did come from Strava, but I’ve created my own map on the cheap using a pinch of guile (read: R and Excel).

This version of the map features a larger geographic area to capture some of the more adventurous runs that I have taken to places like the National Arboretum, the C&O Trail, and down past DCA airport. The highest densities of runs are along the main streets around downtown, Dupont Circle, and the National Mall. Rosslyn and south of the airport were two areas that I wanted to explore more in my 2020 post, both of which show up in darker red on this new figure.

To complement the geographic distribution of runs, I also plotted average pace against run distance. This was a challenge to analyze because the data and dates were downloaded in Spanish (don’t ask), but eventually I was able to plot each of the 798 (!) DC-based runs over the past five years. The runs in 2023 are highlighted in red. Luckily, the analysis reveals that many of the fastest runs for each distance are from the past six months. Progress! My rededication to running at a more relaxed pace for my five-mile recovery runs is also visible. The next step is to fill in the space in this figure with red dots for the rest of 2023, with the ultimate goal of placing a new dot in the bottom right corner by 2024. Wish me luck.
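
For anyone facing the same Spanish-dates problem, here is a minimal sketch of one way to handle it. It is written in Python rather than the R and Excel used for the figures above, and it rests on assumptions: the date layout (day, month name or abbreviation, year), the helper names, and the sample runs are all hypothetical, so check them against your own export.

```python
from datetime import date

# Spanish month names keyed by their first three letters. This covers both
# abbreviations ("abr") and full names ("abril"). Assumed, not taken from
# the actual export.
SPANISH_MONTHS = {
    "ene": 1, "feb": 2, "mar": 3, "abr": 4, "may": 5, "jun": 6,
    "jul": 7, "ago": 8, "sep": 9, "oct": 10, "nov": 11, "dic": 12,
}


def parse_spanish_date(text: str) -> date:
    """Parse dates like '12 abr 2023' or '3 de diciembre de 2019'."""
    cleaned = text.lower().replace(".", "").replace(",", "")
    tokens = [token for token in cleaned.split() if token != "de"]
    day, month, year = tokens
    return date(int(year), SPANISH_MONTHS[month[:3]], int(day))


def pace_min_per_mile(distance_miles: float, elapsed_seconds: float) -> float:
    """Average pace in minutes per mile."""
    return elapsed_seconds / 60.0 / distance_miles


# Made-up runs for illustration: (date text, miles, elapsed seconds).
runs = [("12 abr 2023", 5.0, 2400.0), ("3 de diciembre de 2019", 10.0, 5400.0)]

for raw_date, miles, seconds in runs:
    run_date = parse_spanish_date(raw_date)
    highlight = run_date.year == 2023  # 2023 runs get the red dots
    print(run_date.isoformat(), miles, pace_min_per_mile(miles, seconds), highlight)
```

Each resulting (distance, pace) pair is one dot in a pace vs. distance figure, and the year flag decides which dots are drawn in red.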
