
In 2018, I trained a recurrent neural network, a type of artificial intelligence/machine learning (AI/ML) model, on all the plots of Star Trek (TNG, DS9, and VOY). The goal was to have the network create new and exciting plots based on the characters, places, and themes of the original episodes. After training for 24 hours, I asked the neural network to generate new text based on what it had “learned.” As you can read in my previous post, the output was more or less Star Trek-themed nonsense. While the network output words in an order that approximated sentences, there was no sense of plot or structure in the output. However, this was representative of the cutting-edge AI/ML tools available at the time. And in a world without new Star Trek, it was an entertaining nostalgic diversion.

Since that prior post, two things have happened. First, in 2023 the media-consuming public is in the midst of something like a Star Trek renaissance, with several series (Discovery, Picard, Prodigy, Lower Decks, and Strange New Worlds) all bringing new ideas to the Star Trek canon. Second, more recently and more crucially, OpenAI has released ChatGPT. This new tool makes generating text using AI significantly easier and more intuitive.

My original recurrent neural network experiment was extremely cumbersome. It required 24 hours of training on my personal laptop. It required Python code to extract the plot synopses, train the model, and request the output. After all that, the model produced output letter by letter that was not usable for the desired purpose.

By contrast, ChatGPT is both more useful and more usable. It is a general-purpose large language model that the OpenAI team has pretrained on enormous servers, giving it far more training than my laptop ever could. It can generate responses to complex prompts that incorporate prior context and knowledge from the internet. On top of that, anyone can access it for free via an easy-to-use chat-like interface instead of complex Python code.

To demonstrate how much better the ChatGPT output is, here is one sample synopsis that ChatGPT produced for me when given the prompt, “Write a 100 word plot synopsis for an episode of the television show Star Trek Deep Space 9 that has never existed.”

“Title: Shadows of the Past

In the unexplored Gamma Quadrant, the crew of Deep Space 9 investigates a planet trapped in a repeating temporal loop. They discover the Zynthians, a technologically advanced species, who made a pact with a malevolent entity, leading to their downfall. The crew must break the cycle and free the Zynthians, facing temporal distortions and moral dilemmas. Unity and forgiveness become the key to redemption as they race against time.

This thought-provoking episode explores the consequences of choices and the power of hope, reminding us that even in darkness, understanding can pave the way for a brighter future.”

This response demonstrates some key improvements over the text that my recurrent neural network generated. The plot follows conventional story structure and includes the same alien species throughout (the Zynthians). ChatGPT generated a relevant title based on the plot. It knew that the Gamma Quadrant is a recurring setting in Star Trek: DS9, and a longer version of this synopsis included the names of the main characters of the show. Recognizing that Star Trek episodes usually have a moral or philosophical theme, it emphasized that this episode focuses on “the consequences of choices and the power of hope.” Overall, this is a monumental improvement over my previous attempts and demonstrates the utility and usability of ChatGPT in generating text. In 2018, leading experts in the field were effusive in their praise for recurrent neural networks. Now, five years later, the AI community has taken a dramatic leap forward.

Despite these improvements, the output is far from perfect. Longer versions of the response had conflicting themes, and ChatGPT's output is necessarily derivative of existing Star Trek works. At the time of writing, there is an ongoing writers' strike. It is important to recognize that while AI has improved dramatically, it cannot replace real writers. It remains a tool that is useful but not creative or emotive. However, given the strides in development, it is not unreasonable to think that the next update to this blog post in 2028 will feature AI-generated plots, scripts, audio, and video, all at the push of a button. Whether those are any good remains to be seen. But at that point it may feel more like we are living in the Star Trek universe rather than writing fiction about it.


First, go visit willrobotstakemyjob.com. Will you lose your job to robots? A lot of articles and think pieces recently have touted the artificial intelligence (AI) revolution as a major job killer. And it probably will be...in a few decades. One of the most commonly studied AI systems is the neural network. In this post I want to demonstrate that, although neural networks are powerful, they are still a long way from replacing people.

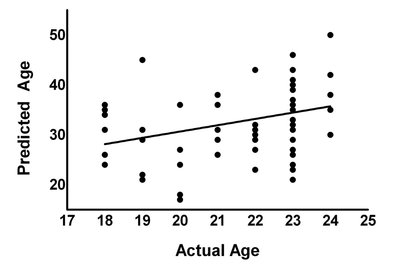

Some brief background: All types of neural networks are, wait for it, composed of neurons. Similar to the neurons in our brains, these mathematical neurons are connected to each other. When we train the network, by showing it data and rating its performance, we teach it how to connect these neurons together to give us the output that we want. It's like training a dog. It does not understand the words that we are saying, but eventually it learns that if it rolls over, it gets a treat. This video goes into more depth if you are curious.[1]

Conventional neural networks take a fixed input, like a 128x128 pixel picture, and produce a fixed output, like a 1 if the picture is a dog and a 0 if it is not. A recurrent neural network (RNN) works sequentially to analyze different sized inputs and produce varied outputs. For instance, RNNs can take a string of text and predict what the next letter should be, given what letters preceded it. What is important to know about them is that they work sequentially and that gives them POWER.

I originally heard about these powerful RNNs from a Computerphile video where they trained a neural network to write YouTube comments (even YouTube trolls will be supplanted by AI). The video directed me to Andrej Karpathy’s “The Unreasonable Effectiveness of Recurrent Neural Networks”. Karpathy is the director of AI at Tesla and STILL describes RNNs as magical. That is how great they are. His article was so inspiring that I wanted to train my very own RNN. Luckily for me, Karpathy had already published an RNN character-level language model, char-rnn [2]. Essentially it takes a sequence of text and trains a computer program to predict what character comes next. With most of the work of setting up the RNN system done, the only decision left was what to train the model on. Karpathy's examples included Shakespeare, War and Peace, and Linux code.
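To make "predict what character comes next" concrete, here is a minimal sketch that swaps the RNN for something far simpler: a table counting which character most often follows each character. This is not how char-rnn works internally (it only looks one character back and has no neurons at all), and the toy corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for every character, which characters follow it in the text."""
    counts = defaultdict(Counter)
    for current_char, next_char in zip(text, text[1:]):
        counts[current_char][next_char] += 1
    return counts


def predict_next(counts, current_char):
    """Return the most frequently observed follower, or None if unseen."""
    followers = counts.get(current_char)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, invented for this example (not the scraped wiki text).
corpus = "the ship is near the station and the shuttle is at the ship"
model = train_bigram_counts(corpus)

# In this corpus "t" is followed by "h" more often than by anything else.
print(repr(predict_next(model, "t")))
```

An RNN does the same job with a learned memory of everything it has read so far instead of a lookup table keyed on a single character, which is what lets it pick up spelling, punctuation, and names.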
I wanted to try something unique, obviously, and because I'm a huge fucking nerd I chose to scrape the Star Trek: Deep Space 9 plot summaries and quotable quotes from the Star Trek wiki [3]. Ideally, the network would train on this corpus of text and generate interesting or funny plot mashups. However, after training the network on the DS9 plot summaries and quotes, I realized that there was not enough text to train the network well. The output was not very coherent. The only logical thing to do was to gather more Star Trek related content, namely, the text from The Next Generation and Voyager episode wiki pages. After gathering the new text, the training data set had a more respectable 1,310,922 words (still small by machine learning standards).

[Technical paragraph] The network itself was a Long Short-Term Memory (LSTM) network (a type of RNN). The network had 2 layers, each with 128 hidden neurons (these are all the default settings, by the way). It took ~24 hours to train the RNN. Normally neural network scientists use specialized high-speed servers. I used my Surface Pro 3. My Surface was not happy about it.

"Show us the results!" Fine. Here is some of the generated text:

"She says that they are on the station, but Seven asks what she put a protection that they do anything has thought they managen to the Ompjoran and Sisko reports to Janeway that he believes that the attack when a female day and agrees to a starship reason. But Sisko does not care about a planet, and Data are all as bad computer and the captain sounds version is in suspicions. But she sees an office in her advancement by several situation but the enemy realizes he had been redued and then the Borg has to kill him and they will be consoled"

Not exactly Infinite Jest, but almost all of those are real (Star Trek) words. Almost like a Star Trek mashup fever dream. Who are the Ompjoran? Why doesn't Sisko care about a planet? What is a starship reason?
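Text like the sample above is produced one character at a time: the network assigns a probability to every candidate next character, and one is drawn at random. How adventurous that draw is depends on a "temperature" setting. Below is a minimal sketch of one common way to apply temperature (divide the log-probabilities by the temperature, then renormalise). The probabilities are hypothetical, the function names are made up for illustration, and this is not necessarily how the implementation used for this post does it.

```python
import math
import random


def apply_temperature(probs, temperature):
    """Rescale a distribution: low temperature sharpens it, high flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Divide log-probabilities by the temperature, then renormalise
    # with a numerically stable softmax.
    scaled = {ch: math.log(p) / temperature for ch, p in probs.items() if p > 0}
    largest = max(scaled.values())
    weights = {ch: math.exp(value - largest) for ch, value in scaled.items()}
    total = sum(weights.values())
    return {ch: weight / total for ch, weight in weights.items()}


def sample_char(probs, temperature, rng=random):
    """Draw one character from the temperature-adjusted distribution."""
    adjusted = apply_temperature(probs, temperature)
    chars = list(adjusted)
    return rng.choices(chars, weights=[adjusted[c] for c in chars], k=1)[0]


# Hypothetical next-character probabilities, invented for this example.
next_char_probs = {"e": 0.6, "a": 0.3, "z": 0.1}

cold = apply_temperature(next_char_probs, 0.1)  # nearly always picks "e"
hot = apply_temperature(next_char_probs, 5.0)   # "z" becomes a real contender

print(sample_char(next_char_probs, 0.1), sample_char(next_char_probs, 5.0))
```

With a temperature near zero the most likely character wins almost every draw, which is why very low settings tend to get stuck repeating themselves; high settings give unlikely characters a real chance, which is where the invented words come from.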
It all seems silly, but what is amazing about this output is that the RNN had to learn the English language completely from scratch. It learned commas, periods, capitalization, and that the Borg are murderous space aliens.

One variable that I can control is the "temperature" of the network output. This tells the RNN how much freedom it has in choosing the next character in a sequence. A high temperature allows for more variability in the results. A temperature close to zero always chooses the most likely next character. This leads to a boring infinite loop:

"the ship is a security officer and the ship is a security officer and the ship is a security officer and the ship is a security officer and the ship is a security officer"

Here is an example of some high-temperature shenanigans. Notice how, like a moody teen, it does whatever it wants:

"It is hoar blagk agable,. Captainck, yeve things he has O'Brien what she could soon be EMH 3 vitall I "Talarias)"

If you want to read more RNN generated output, I have a 15,000 character document here. At one point it says "I want to die," which is pretty ominous. Seriously, check it out.

For future reference, Star Trek may actually be a bad training set. Many of the words in the show are made up, so the network can be justified in also making up words.

Hopefully it is clear to all the Star Trek writers reading this that your jobs are safe from artificial intelligence. For the rest of you, your jobs are probably pretty safe too. For now.

William Riker [a human]: "You're a wise man, my friend."
Data [an android]: "Not yet, sir. But with your help, I am learning."

[1] If you are really curious about neural networks, this free online book is a good resource.

[2] I actually used a TensorFlow Python implementation of Karpathy’s char-rnn code found here.

[3] You can find my code and input files on GitHub here.

How old are you? How old does your computer think you are?
That is the question Corom Thompson and Santosh Balasubramanian over at Microsoft have been thinking about. With Big Data it probably is not too tough for a computer to crunch the websites you visit and the purchases you make online to spit out a reasonable guess for your age. That’s how targeted advertising works. But what if computers only had pictures of you? Behold how-old.net.

Just like that carnival “game,” can a computer guess your age by just how you look? Um…I guess not. But this was only one trial, so I decided to try again…seventy-five more times. All with pictures of myself.

This quickly devolved into quite a narcissistic project. It involved delving into the photos on my Facebook profile, which proved to be both a hilarious and horrific endeavor. After shamefully mining my own photographs, I ran a simple regression to get a rough idea of how the algorithm was doing.

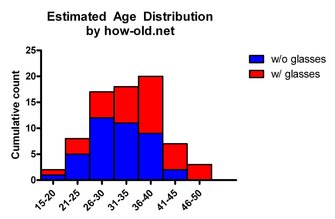

Just for fun, I decided to break the images into pictures where I was wearing glasses and pictures where I was not. If the algorithm is reading facial features, glasses could be affecting the results. This split divided the images almost exactly in half.
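For anyone who wants to repeat this kind of check, here is a minimal sketch of a simple least-squares regression of guessed age on actual age, fitted separately for photos with and without glasses. The records are made-up placeholders (not the measurements from this post), and the function and field names are assumptions chosen for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Placeholder records, invented for this example:
# (actual_age, guessed_age, wearing_glasses)
photos = [
    (18, 20, False), (22, 24, False), (26, 28, False),
    (19, 24, True), (23, 28, True), (27, 32, True),
]

fits = {}
for glasses in (False, True):
    group = [(actual, guess) for actual, guess, worn in photos if worn == glasses]
    actual_ages = [actual for actual, _ in group]
    guessed_ages = [guess for _, guess in group]
    fits[glasses] = fit_line(actual_ages, guessed_ages)

for glasses, (slope, intercept) in fits.items():
    print(f"glasses={glasses}: slope={slope:.2f}, intercept={intercept:.2f}")
```

A perfect guesser would give a slope of 1 and an intercept of 0, so comparing the two fitted lines against that ideal is one way to see whether glasses shift the guesses.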
